Control equals liability. Anyone who controls an area, product, or service—whether physical or digital—is responsible for what happens there (or with it). There are some exceptions to this rule, but the principle remains. For instance, the manager of a bar is liable if a customer trips over a case of wine. Similarly, the manager of a website is responsible if all users’ passwords are hacked because he stored them in a Word document. In response, many are trying to escape liability by eliminating all forms of control.

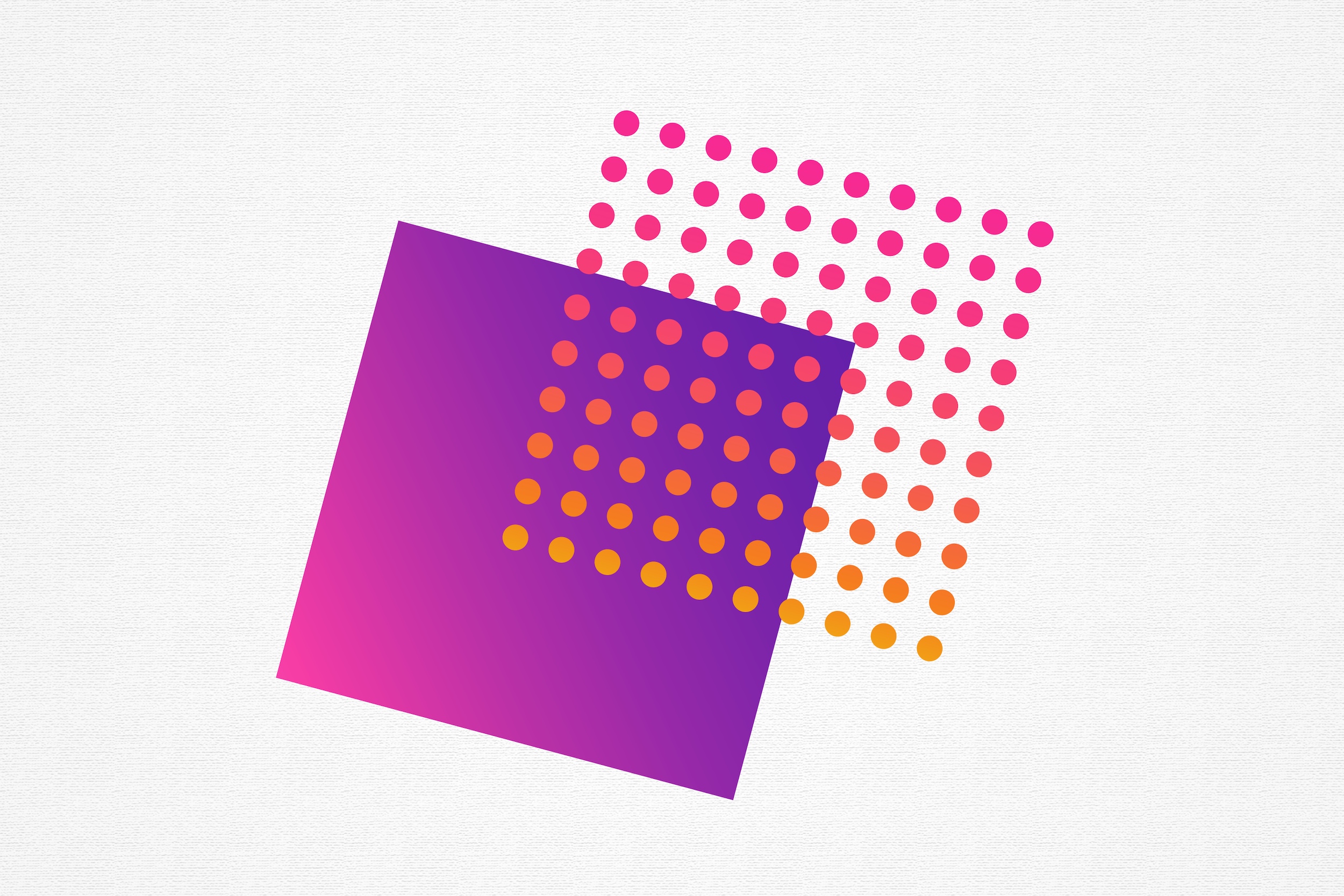

But what does it mean? How can one not be in control? Do technical and regulatory developments lead to the same response? As I shall explain, the concept of control is dynamic and multi-faceted—which is creating difficulties. To clarify why that is, let me first start with a graphic:

The x-axis represents the technical (design-related) capacity to control the use that is made of a space, product, or service. On the left, no individual can technically control it: there is no intermediary, no capacity to influence interactions. On the right, a single individual is at the center of all transactions: she or he can change them, validate some, and eliminate others. Schematically, public permissionless blockchains are on the left of the axis, while big tech is on the right.

The y-axis represents the practical (governance-related) capacity to control the use that is made of a space, product, or service. At the bottom, no individual can control interactions, because there are too many of them, because they are invisible until fully executed, or because their nature is too complex to understand. At the top, one individual controls interactions from a practical point of view: he or she sees them all before they are fully executed and understands them all.

On the one hand, these two (control) capacities are related to one another. The absence of technical capacity to control interactions implies the lack of a practical one. This explains why the square in the upper left corner is greyed out. Similarly, it illustrates why blockchains do not fall at the top of the graph despite more or less informal governance systems (see this article discussing it). Without a power of command and control, the practical capacity is necessarily diminished (though not necessarily non-existent, which explains why public and private blockchains are not exactly at the same level). Finally, this shows why Wikipedia has escaped most of the discussion related to intermediary liability. Indeed, while it is technically possible to remove content from it (Wikipedia is not immutable like blockchains), the absence of clearly defined governance defeats accountability.

On the other hand, these two capacities are not strictly correlated. Indeed, the technical capacity to control interactions does not always translate into practice. To be sure, Google can easily delete certain results from its aggregator, Facebook can do the same with posts or accounts, and Amazon can eliminate dangerous products. But when billions of results, posts, and products are published every day, it becomes practically impossible to monitor them all. This explains the rationale behind Section 230 of the Communications Decency Act, which shields online platforms from liability for third-party content. The same logic lies behind the proposed Digital Services Act (“DSA”). Article 3 states:

“Where an information society service is provided that consists of the transmission in a communication network of information provided by a recipient of the service, or the provision of access to a communication network, the service provider shall not be liable for the information transmitted on condition that the provider: (a) does not initiate the transmission; (b) does not select the receiver of the transmission; and (c) does not select or modify the information contained in the transmission.”

Such reasoning, however, depends heavily on the state of technology. If a digital giant develops a system that identifies illegal content before it is posted, that company will regain a practical capacity to control and, with it, potential liability. One can therefore imagine that, 5 or 10 years from now, Article 3 of the DSA (which is technically sound in 2021) will give way to a principle under which liability arises as soon as illegal content is published (for example, NLP systems can be developed to detect and prevent racist, misogynist, or insulting content).

The case will remain more complex when governance is (more) decentralized. Wikipedia and blockchains are good examples. Even assuming that technical advances are available, one will not be able to impose liability as long as no user effectively holds that technical capacity to control interactions. Of course, some of these users will always try to seize control in order to pilot the product or service (which can be valuable from a business perspective). This, however, might not be effective enough if the rest of the community keeps or creates the technical means to resist their control. Now, what do we do in a world where one cannot impose liability when something goes wrong? Do we do… nothing? Do we force people to be in charge so that they become liable? Neither of these two solutions seems good to me. I’ll come back to you very soon on that…

Dr. Thibault Schrepel

@LeConcurrential

***

Citation: Thibault Schrepel, “Control = liability”: exploring Section 230, the DSA, Big Tech, Wikipedia and Blockchains, CONCURRENTIALISTE (March 9, 2021)