Legal scholars have long been interested in predicting the effects of new rules and standards, yet they have paid very little attention to the timing of regulation. In a recent working paper co-authored with John Schuler, we explore how agent-based modeling can help fill that gap.

Agent-based modeling (“ABM”), as we explain, is a computer simulation with unique agents that respond to the behaviors and strategies of other agents. As such, ABM is a tool for better understanding complex adaptive systems, which we illustrate by modeling an ecosystem in which agents search for online information. Agents in the ecosystem fall along a spectrum of privacy/quality sensitivity. Some are privacy fundamentalists. Some care only about quality. Most are somewhere in between.
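
To make the idea concrete, here is a minimal Python sketch of agents spread along a privacy/quality spectrum who re-evaluate their behavior after observing what others do. It is an illustration only, not the model from the working paper: the names `Agent`, `make_population`, and `step`, the Beta(2, 2) distribution, and every numeric parameter are assumptions chosen for readability.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    # 0.0 = cares only about quality, 1.0 = privacy fundamentalist
    privacy_weight: float
    uses_search: bool = True


def make_population(n, seed=0):
    """Create n agents; Beta(2, 2) places most of them mid-spectrum (assumed)."""
    rng = random.Random(seed)
    return [Agent(privacy_weight=rng.betavariate(2, 2)) for _ in range(n)]


def step(agents, quality=0.8, privacy_cost=0.6, peer_pull=0.3):
    """Run one round and return the share of agents using the search engine.

    Each agent weighs quality against privacy cost according to its own
    sensitivity, then adjusts for how many agents used the engine last round.
    """
    share = sum(a.uses_search for a in agents) / len(agents)
    for a in agents:
        own = (1 - a.privacy_weight) * quality - a.privacy_weight * privacy_cost
        a.uses_search = own + peer_pull * (share - 0.5) > 0
    return sum(a.uses_search for a in agents) / len(agents)


if __name__ == "__main__":
    population = make_population(500)
    for round_number in range(5):
        print(round_number, round(step(population), 3))
```

The point of the sketch is the feedback loop: each agent's choice depends partly on the aggregate choices of the others, which is what distinguishes this approach from reasoning about a single average user.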

We first simulate an environment with a single firm (we call it Google) that prioritizes the quality of search results (represented by speed, i.e., the time it takes to identify the answer users are looking for) over privacy. We can then introduce any of the following changes to the competitive and/or regulatory environment, in any order:

- A new entrant that prioritizes privacy over learning, resulting in a search engine that is less efficient (i.e., takes longer to find desired results) but more privacy-preserving.

- A privacy mandate that requires organizations to delete individual data upon request, similar to Article 17 of the EU General Data Protection Regulation.

- An antitrust provision that allows consumers to transfer their personal data to another platform, similar to the data portability provision in Article 6.9 of the Digital Markets Act.

- A virtual private network (“VPN”) that allows users to hide their true identity, thereby protecting their privacy.

These privacy mechanisms (e.g., DuckDuckGo, mandatory data deletion, or use of VPNs) spread throughout the network as agents observe the actions of their peers and constantly reevaluate their choices.
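
The setup described above can be sketched in a few lines of Python. This is a toy under stated assumptions, not the working paper's implementation: the function names (`apply_change`, `choose`, `simulate`), the quality and privacy scores, and the size of each change's effect are all hypothetical, and the VPN is simplified to a privacy boost available to every agent.

```python
import random

# Hypothetical starting scores on a 0-1 scale.
ENGINES = {
    "Google": {"quality": 0.9, "privacy": 0.2},
    "DuckDuckGo": {"quality": 0.6, "privacy": 0.9},
}


def apply_change(change, env):
    """Mutate the environment for one change; all effect sizes are made up."""
    if change == "entrant":
        env["available"].add("DuckDuckGo")
    elif change == "deletion":
        env["engines"]["Google"]["privacy"] += 0.2
    elif change == "portability":
        env["engines"]["DuckDuckGo"]["quality"] += 0.1
    elif change == "vpn":
        env["vpn"] = True
    else:
        raise ValueError(f"unknown change: {change}")


def choose(weight, current, peers, env):
    """Return the engine with the highest utility given what sampled peers use."""
    best, best_utility = current, float("-inf")
    for name in sorted(env["available"]):
        engine = env["engines"][name]
        privacy = min(1.0, engine["privacy"] + (0.3 if env["vpn"] else 0.0))
        peer_share = peers.count(name) / len(peers)
        utility = (1 - weight) * engine["quality"] + weight * privacy + 0.2 * peer_share
        if utility > best_utility:
            best, best_utility = name, utility
    return best


def simulate(order, n_agents=300, rounds_per_change=5, n_peers=10, seed=0):
    """Apply changes in the given order and return Google's final usage share."""
    rng = random.Random(seed)
    env = {
        "engines": {name: dict(scores) for name, scores in ENGINES.items()},
        "available": {"Google"},
        "vpn": False,
    }
    weights = [rng.betavariate(2, 2) for _ in range(n_agents)]
    choices = ["Google"] * n_agents
    for change in order:
        apply_change(change, env)
        for _ in range(rounds_per_change):
            snapshot = list(choices)  # agents observe last round's choices
            for i, weight in enumerate(weights):
                peers = rng.sample(snapshot, n_peers)
                choices[i] = choose(weight, choices[i], peers, env)
    return choices.count("Google") / n_agents
```

Calling `simulate(["entrant", "deletion", "portability"])` and `simulate(["entrant", "portability", "deletion"])` runs the same changes in two different orders. In this toy, any difference between the two runs comes only from peer effects that build up between changes; the numbers are not calibrated to real data.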

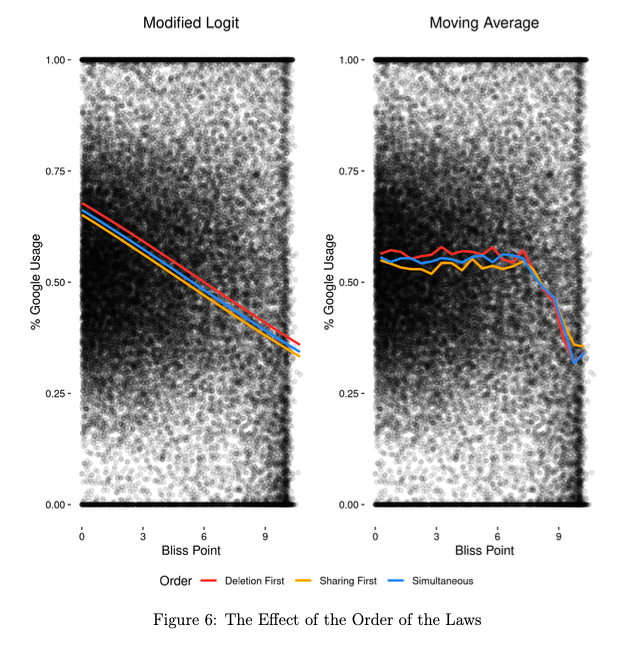

What we find when we run the simulation has important implications for policymakers. Not only do the privacy mechanisms benefit Google (because, in short, they make Google more privacy-friendly), but the order in which the rules are introduced also matters. Introducing the deletion rule first (and the antitrust data-sharing rule second) moves the ecosystem to a state where Google usage is highest. Conversely, introducing the sharing rule first (and the deletion rule second) moves the ecosystem toward a state where DuckDuckGo is more popular, because the sharing rule allows for learning effects that are not (fully) compensated by the deletion rule.

Because agent-based regulatory and enforcement policies are more realistic than average-based policies (i.e., policies that rely on an imaginary, static, average user or consumer), our working paper concludes with institutional steps that policymakers and regulators can take to use ABM. We list four of them: (1) design rules and standards that are computable, (2) anticipate the validity (and limits) of computable evidence in legal proceedings, (3) link ABM to real-world data, and (4) protect the rights of the defense. More details can be found in the working paper.